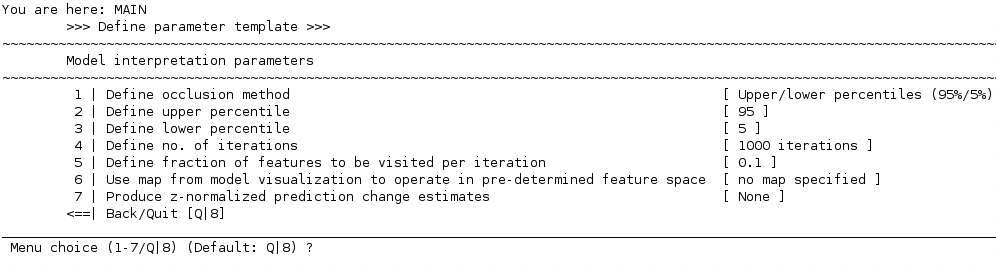

Prediction interpretation options#

To increase clinical significance, individual explanations for predictions are essential. In this regard, NeuroMiner offers different methods to obtain interpretable and transparent models.

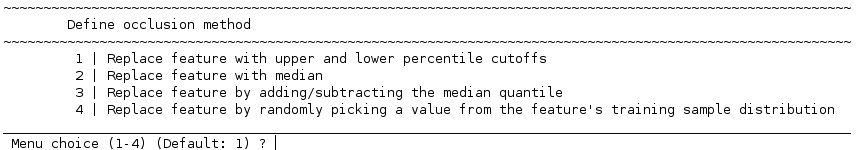

Define occlusion method#

Interpretability in NeuroMiner is based on occlusion methods where a fraction of features will be occluded and the change in prediction outcome in response to this occlusion will be quantified. Six different occlusion methods are available:

A fraction of the features will be replaced by 1) extreme values (upper and lower percentile that can be chosen by the user); 2) the median of each feature’s training sample; 3) the feature value with the median quantile added or subtracted (depending on whether that individual’s feature is below or above the training sample median); or 4) the feature value mirrored at the training sample median; 5) a random value from each feature’s training sample distribution; or 6) Shapley values using the kernel SHAP algorithm as implemented in MATLAB’s Shapley function.

Please note that the third method might not be suitable for ordinally scaled data. Moreover, if the number of features is very low the number of possible permutations will also be low. In these scenarios the fifth occlusion method, e.g. occlude by random value from each feature’s training sample, or Shapley should be selected to obtain reasonable results.

Note

Note that for high dimensional data, i.e. if the number of features exceeds half the number of iterations, Shapley might not lead to accurate results. We therefore strongly recommend the selection of features using a map from model visualisation and/or, in the case of neuroimaging data, linking the data modification to a neuroanatomical atlas.

Note

When used in intermediate fusion analysis, the number of iterations is automatically adjusted to the lowest number of iterations possible across all modalities for the first four occlusion methods. If this results in a too low number of iterations, NeuroMiner will give a warning and it is recommended to use one of the other two methods, i.e. “random” or “shapley”.

Define no. of iterations#

Here the number of iterations can be defined, i.e. how often a fraction of features should be occluded. The default value is 1024 but one has to keep in mind that this might be limited by the number of possible combinations, especially if the number of input features is low.

Define fraction of features to be visited per iteration#

Here the user can define the fraction of features to be occluded each iteration. If a mask is applied, this fraction is applied to the remaining features after applying the mask.

Define fraction of training samples to be visited per iteration (Shapley)#

For Shapley values, the features are masked by replacing them with the training data. In contrast to the “random” method, the features are not just randomly replaced by one value from the training data distribution, but are replaced several times by different training data. In the end, the mean value is taken. Here, the user can specify the proportion of training samples that are randomly selected for feature occlusion.

Normalize prediction change estimates at the case level#

It can be specified whether the prediction change estimates, i.e. the output of the interpretability module, shall be reported and plotted (in the MLI Viewer) normalized to group-level (i.e. option “None”), or mean centered, z-normalized or scaled between -1 and 1 at case-level.

Use map from model visualization to operate in predetermined feature space#

When the visualization module will be run, one can specify a map here, which will narrow down the feature space that will be evaluated by the interpretability module. The user can choose from different maps which will be created during visualization. If a map will be used, the user can threshold the map by defining a cutoff value or range, a cutoff method and a cutoff operator (e.g. to determine whether features below or above a specified cutoff value or within or outside a specified range should be included for prediction interpretation).

If you enable this option, the menu will show two additional suboptions in the menu:

7 | Define which map to use [Cross-validation ratio map] 8 | Define cutoff value (scalar or 2-value vector) [-2 2]

Produce z-normalized prediction change estimates#

It can be specified whether the prediction change estimates, i.e. the output of the interpretability module, shall be reported and plotted (in the MLI Viewer) as they are (i.e. option “None”), mean centered or z-normalized.

Bind data modification to neuroanatomical atlas#

This option is specific to neuroimaging data. Especially for image data the dimensions are not independent. Hence, it might be more meaningful instead of randomly modifying individual voxels to bind the manipulations to brain regions/systems. To define these brain regions/systems the user can read in a neuroanatomical atlas. This requires an atlas imaging file, i.e. a nifti image, and an atlas descriptor file listing the ROIs (a csv or txt file). For the atlas descriptor file, the user can specify the delimiter and the column names where the ROI IDs and the ROI names are specified. Several neuroanatomical atlases are provided within the NeuroMiner software folder (“/NeuroMIner/interpretable/anatomical”). When the files are correctly specified, the user needs to “Import atlas descriptor file“.